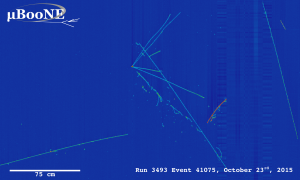

First neutrino event candidates identified by MicroBooNE. The image shows the raw data after some low-level processing, which is the input to the hit-finding and particle-flow reconstruction (i.e. pattern recognition) phases.

Pattern recognition rules in particle physics. When particles collide, many things happen at once and in rapid succession, all within fractions of a second. To tell everyday events from rare ones, particle physicists use pattern recognition software to quickly scan and classify pictures of the collisions.

A software development kit and event reconstruction system called Pandora has been helping detector developers design and run pattern recognition algorithms since 2009. Under the AIDA-2020 project, Pandora has been enhanced for a new use: the liquid-argon time projection chamber of the MicroBooNE neutrino experiment, based at Fermilab in the United States.

Pandora was originally developed for tracking particles through calorimeters being designed for the International Linear Collider (ILC) and later the Compact Linear Collider (CLIC). The toolkit was the first to meet the particle flow challenge set by linear-collider physicists: to track and identify every single particle from a collision throughout the whole detector.

Pandora uses a multi-algorithm approach to pattern recognition, in which many small algorithms gradually build up a picture of events. Each algorithm specialises in a specific characteristic or event topology. For the MicroBooNE experiment, Pandora now provides a fully automated reconstruction of neutrino and cosmic-ray events in a very different detector environment to that of the linear collider.
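The multi-algorithm idea can be illustrated with a toy chain of small steps, each refining a shared picture of the event. This is only a minimal sketch under stated assumptions: the `Event` class, the three algorithm functions, and their thresholds are all hypothetical and are not taken from Pandora's actual code or API.

```python
import math
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Hit = Tuple[float, float]  # hypothetical 2D hit: (wire position, drift position)

@dataclass
class Event:
    hits: List[Hit]
    clusters: List[List[Hit]] = field(default_factory=list)

def seed_clusters(event: Event, max_gap: float = 1.5) -> None:
    """Step 1: group hits (sorted by wire position) with their near neighbours."""
    event.clusters = []
    for hit in sorted(event.hits):
        last = event.clusters[-1] if event.clusters else None
        if (last is not None
                and abs(hit[0] - last[-1][0]) <= max_gap
                and abs(hit[1] - last[-1][1]) <= max_gap):
            last.append(hit)
        else:
            event.clusters.append([hit])

def drop_small_clusters(event: Event, min_hits: int = 2) -> None:
    """Step 2: discard isolated fragments that are too small to be useful."""
    event.clusters = [c for c in event.clusters if len(c) >= min_hits]

def merge_adjacent_clusters(event: Event, max_gap: float = 3.0) -> None:
    """Step 3: join consecutive clusters whose end points are close together."""
    merged: List[List[Hit]] = []
    for cluster in event.clusters:
        if merged and math.dist(merged[-1][-1], cluster[0]) <= max_gap:
            merged[-1].extend(cluster)
        else:
            merged.append(list(cluster))
    event.clusters = merged

def run_chain(event: Event, algorithms: List[Callable[[Event], None]]) -> Event:
    """Run each small algorithm in turn on the same event."""
    for algorithm in algorithms:
        algorithm(event)
    return event

event = run_chain(
    Event(hits=[(0, 0), (1, 1), (2, 2), (4, 4), (5, 5), (20, 3)]),
    [seed_clusters, drop_small_clusters, merge_adjacent_clusters],
)
```

In this toy example no single step reconstructs the track on its own: the first step leaves it in two pieces plus a stray hit, and only the full chain yields one clean cluster.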

For MicroBooNE, three separate two-dimensional images per event have to be processed by pattern recognition to arrive at a three-dimensional representation of the event. This can be tricky, explains John Marshall, one of the Pandora project leaders: “Features are routinely hidden in at least one view when, for example, two tracks lie on top of one another when viewed from a particular angle. Our new algorithms have a sophisticated interplay between 2D and 3D reconstruction, with iterative corrections made to the 2D reconstruction if features do not correspond between the three ‘views’ of the event.” Pandora thus learns from its own algorithms.

In the end, the neutrino interactions can be seen in amazing detail. “They are intrinsically very complicated and frequently difficult to reconstruct,” says Marshall.

“The human brain and eye can normally do a very good job at separating the different particles in the images, but sometimes it’s not easy, even for a human!”

The MicroBooNE detector consists of a time projection chamber (TPC) filled with liquid argon. When neutrinos generated from a proton beam at Fermilab pass through the dense liquid, some of them interact with argon nuclei and create secondary particles that ionise the argon. The freed electrons then drift to three wire planes at the TPC’s anode. It’s these three planes that deliver the three different two-dimensional images that Pandora helps reconstruct.
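Because all three wire planes record the same drifting electrons, a feature should appear at a similar drift position in every view. The following minimal sketch uses that idea to check whether clusters from the three views correspond. It is an illustration only: the span representation, the function names, and the 0.8 overlap threshold are assumptions made for this example, not Pandora's actual matching algorithm.

```python
from typing import List, Tuple

# Hypothetical cluster summary: (min, max) extent along the shared drift coordinate.
Span = Tuple[float, float]

def overlap_fraction(a: Span, b: Span) -> float:
    """Shared drift extent divided by the shorter of the two spans."""
    shared = min(a[1], b[1]) - max(a[0], b[0])
    shortest = min(a[1] - a[0], b[1] - b[0])
    if shared <= 0 or shortest <= 0:
        return 0.0
    return shared / shortest

def match_views(u: List[Span], v: List[Span], w: List[Span],
                min_overlap: float = 0.8) -> List[Tuple[int, int, int]]:
    """Return index triplets (i, j, k) whose clusters overlap pairwise in drift."""
    matches = []
    for i, a in enumerate(u):
        for j, b in enumerate(v):
            if overlap_fraction(a, b) < min_overlap:
                continue
            for k, c in enumerate(w):
                if (overlap_fraction(a, c) >= min_overlap
                        and overlap_fraction(b, c) >= min_overlap):
                    matches.append((i, j, k))
    return matches

# Two clusters per view; the second one has no counterpart in the middle view.
u_view = [(0.0, 10.0), (20.0, 30.0)]
v_view = [(1.0, 10.0), (50.0, 60.0)]
w_view = [(0.0, 9.0), (21.0, 29.0)]
matches = match_views(u_view, v_view, w_view)
```

Here only the first cluster in each view forms a consistent triplet. The second cluster is visible in two views but not the third, which is the kind of mismatch that would call for revisiting the 2D reconstruction.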

Interesting events are used to develop the toolkit further. “In-Pandora visualisation tools allow relevant clusters to be displayed and colour-coded, markers can be added to indicate feature points, lines added to indicate straight-line fits, etc.,” explains John Marshall. “This visual approach greatly aids algorithm development. Once an algorithm has been developed in the context of a few events, testing starts to be scaled up to large event samples. Pandora provides a lot of internal error checking, so any mistakes in algorithm logic are normally identified and highlighted very quickly.”

Pattern recognition is likely to become even more important in the future as images of collisions become more and more detailed with improving detector technologies.

This article was first published in On Track, the newsletter for the AIDA-2020 project.
